Whew! This chapter took much longer to process than I had anticipated. It was mainly because I had to stop every few pages to revisit a concept in linear algebra or probability. So I’m listing out those concepts below in the hope that it will be helpful to anyone attempting this chapter.

- Vector subspaces (the column space, row space, nullspace and left nullspace), their dimensions, and the orthogonality relationships between them.

- Expectation and variance of a random variable and the relationship between them (via the second moment).

- Using Jacobians and first-order approximations.

- Covariance matrices as a measure of uncertainty.

- Generalized least squares.

- Multivariate normal distributions, especially anisotropic ones.

Chapter 12 of the book “Introduction to Linear Algebra” by Gilbert Strang is pretty useful for the last three concepts.

Apart from the exercise solutions, there are some notes/proofs that I would like to provide to clarify some of the material in the text.

- Derivation of RMS residual and estimation errors.

  If you’re wondering, like I did, why the expectation of the squared term equals the variance, this is because of the following rule:

  $$\operatorname{Var}(X) = E[X^2] - (E[X])^2$$

  In this case, the error, by assumption, has zero mean, so $E[X] = 0$. Hence, we get $E[X^2] = \operatorname{Var}(X) = \sigma^2$, where $E[X^2]$ is the Mean Square Error or MSE (not the root mean square error, RMSE).

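- RMS residual and estimation errors for anisotropic error distributions.

  The random variable $\|\mathbf{X} - \hat{\mathbf{X}}\|^2_{\Sigma}$ (the squared Mahalanobis distance) has a $\chi^2$ distribution [1] with degrees of freedom equal to the dimension of the underlying normally distributed random variable ($N - d$ in this case, and likewise $d$ for the estimation error $\|\hat{\mathbf{X}} - \bar{\mathbf{X}}\|^2_{\Sigma}$). The expectation of a $\chi^2$ random variable is equal to its degrees of freedom. Hence,

  $$E\left[\|\mathbf{X} - \hat{\mathbf{X}}\|^2_{\Sigma}\right] = N - d, \qquad E\left[\|\hat{\mathbf{X}} - \bar{\mathbf{X}}\|^2_{\Sigma}\right] = d$$

  And the respective RMS errors will be

  $$\epsilon_{res} = \left(1 - \frac{d}{N}\right)^{1/2}, \qquad \epsilon_{est} = \left(\frac{d}{N}\right)^{1/2}$$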

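- Proof of Result A5.2 (p591).

  Honestly, I didn’t understand the argument for sufficiency in the sketch proof so I’ll provide my own. Here $X$ is a symmetric $N \times N$ matrix of rank $r$, $\mathcal{N}_L$ and $\mathcal{N}_R$ denote left and right nullspaces, and $X^{+A} = A(A^T X A)^{-1}A^T$. We need to prove that if $\mathcal{N}_L(A) = \mathcal{N}_L(X)$, then $X^{+A} = X^{+}$.

  - From the definition of $X^{+A}$, we can see that $\mathcal{N}_L(A) \subseteq \mathcal{N}_L(X^{+A})$, and as we know that the left nullspaces of $A$ and $X^{+A}$ have the same dimension ($N - r$), these two nullspaces must be equal. Furthermore, as $X^{+A}$ is symmetric, this is also its right nullspace. Hence $\mathcal{N}_R(X^{+A}) = \mathcal{N}_L(X^{+A}) = \mathcal{N}_L(A)$.

  - Now $X = UDU^T$ and $X^{+} = UD^{+}U^T$. As the last $N - r$ columns of $U$ contain the basis for the left nullspace of both $X$ and $X^{+}$, they both must have the same left, and by symmetry right, nullspaces. Hence $\mathcal{N}_R(X^{+}) = \mathcal{N}_L(X^{+}) = \mathcal{N}_L(X)$.

  - As $\mathcal{N}_L(A) = \mathcal{N}_L(X)$, we get $\mathcal{N}_L(X^{+A}) = \mathcal{N}_L(X^{+})$ and $\mathcal{N}_R(X^{+A}) = \mathcal{N}_R(X^{+})$.

  - This further implies that the row spaces and column spaces of the two matrices $X^{+A}$ and $X^{+}$ are equal, as they both are matrices of rank $r$.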

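  - Sharing all four vector subspaces is not quite enough by itself to make two matrices equal ($X$ and $2X$ share all four), so one explicit step finishes the proof. Writing the eigendecomposition $X = UDU^T$, let $U_r$ be the first $r$ columns of $U$ and $D_r$ the invertible $r \times r$ block of $D$, so that $X = U_r D_r U_r^T$ and $X^{+} = U_r D_r^{-1} U_r^T$. As the left nullspaces of $A$ and $X$ agree, the columns of $A$ span the column space of $X$, so $A = U_r B$ for some invertible $r \times r$ matrix $B$. Then $A^T X A = B^T D_r B$ and $X^{+A} = A(A^T X A)^{-1}A^T = U_r B (B^T D_r B)^{-1} B^T U_r^T = U_r D_r^{-1} U_r^T = X^{+}$. Hence $X^{+A} = X^{+}$ whenever $A$ and $X$ have the same left nullspace.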

- Whenever the authors refer to “noise/error of 1 pixel”, what they actually mean is that the errors come from a multivariate normal distribution with zero mean and a standard deviation of 1 pixel in each coordinate.
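The two less obvious facts in the notes above (the expectation of a squared Mahalanobis distance, and Result A5.2) can be sanity-checked numerically. What follows is only a rough Python/NumPy sketch: the helper names `mean_sq_mahalanobis`, `pinv_wrt` and `random_rank_deficient`, and the test matrices, are made up for illustration and do not come from the book.

```python
import numpy as np


def mean_sq_mahalanobis(cov, n_samples=200_000, seed=0):
    """Monte Carlo estimate of E[x^T cov^{-1} x] for x ~ N(0, cov)."""
    rng = np.random.default_rng(seed)
    x = rng.multivariate_normal(np.zeros(cov.shape[0]), cov, size=n_samples)
    # Row-wise quadratic form x_i^T cov^{-1} x_i.
    d2 = np.einsum("ij,ij->i", x @ np.linalg.inv(cov), x)
    return d2.mean()


def pinv_wrt(X, A):
    """X^{+A} = A (A^T X A)^{-1} A^T."""
    return A @ np.linalg.inv(A.T @ X @ A) @ A.T


def random_rank_deficient(n, r, seed=0):
    """Symmetric rank-r matrix X (n x n) and an n x r matrix A with col(A) = col(X)."""
    rng = np.random.default_rng(seed)
    U, _ = np.linalg.qr(rng.standard_normal((n, n)))  # random orthogonal U
    Ur = U[:, :r]
    X = Ur @ np.diag(rng.uniform(1.0, 2.0, size=r)) @ Ur.T
    # Unit upper-triangular B has determinant 1, so it is always invertible.
    B = np.triu(rng.standard_normal((r, r)), k=1) + np.eye(r)
    return X, Ur @ B


if __name__ == "__main__":
    # Anisotropic (but positive definite) covariance, chosen arbitrarily.
    cov = np.array([[4.0, 1.0, 0.0],
                    [1.0, 2.0, 0.5],
                    [0.0, 0.5, 1.0]])
    print("mean squared Mahalanobis distance:", mean_sq_mahalanobis(cov))

    X, A = random_rank_deficient(5, 3)
    same = np.allclose(pinv_wrt(X, A), np.linalg.pinv(X, rcond=1e-10))
    print("X^{+A} equals pinv(X):", same)
```

The first number should land close to the dimension of the random variable (3 here), and the second check should hold because `A` is built to have the same column space, and hence the same left nullspace, as `X`.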

The main index for all chapters can be found here.

I. Consider the problem of computing a best line fit to a set of 2D points in the plane using orthogonal regression. Suppose that $N$ points are measured with independent standard deviation of $\sigma$ in each coordinate. What is the expected RMS distance of each point from a fitted line?

The RMS distance of each point from the fitted line follows directly from the RMS residual error of the orthogonal regression estimator.

Following the discussion from Section 4.2.7(p101), we need to define the dimensions of the measurement space and the model to arrive at the answer.

The measurement space clearly consists of all sets of coordinates of $N$ points, $N$ $x$-coordinates and $N$ $y$-coordinates, i.e. $\mathbb{R}^{2N}$. The dimension of this space is $2N$.

The model in this case is a subset of points in that lie on a line. We only need 2 points to define a line, so the dimension of the model is , i.e. a feasible choice of points making up the submanifold is determined by a set of 4 parameters, .

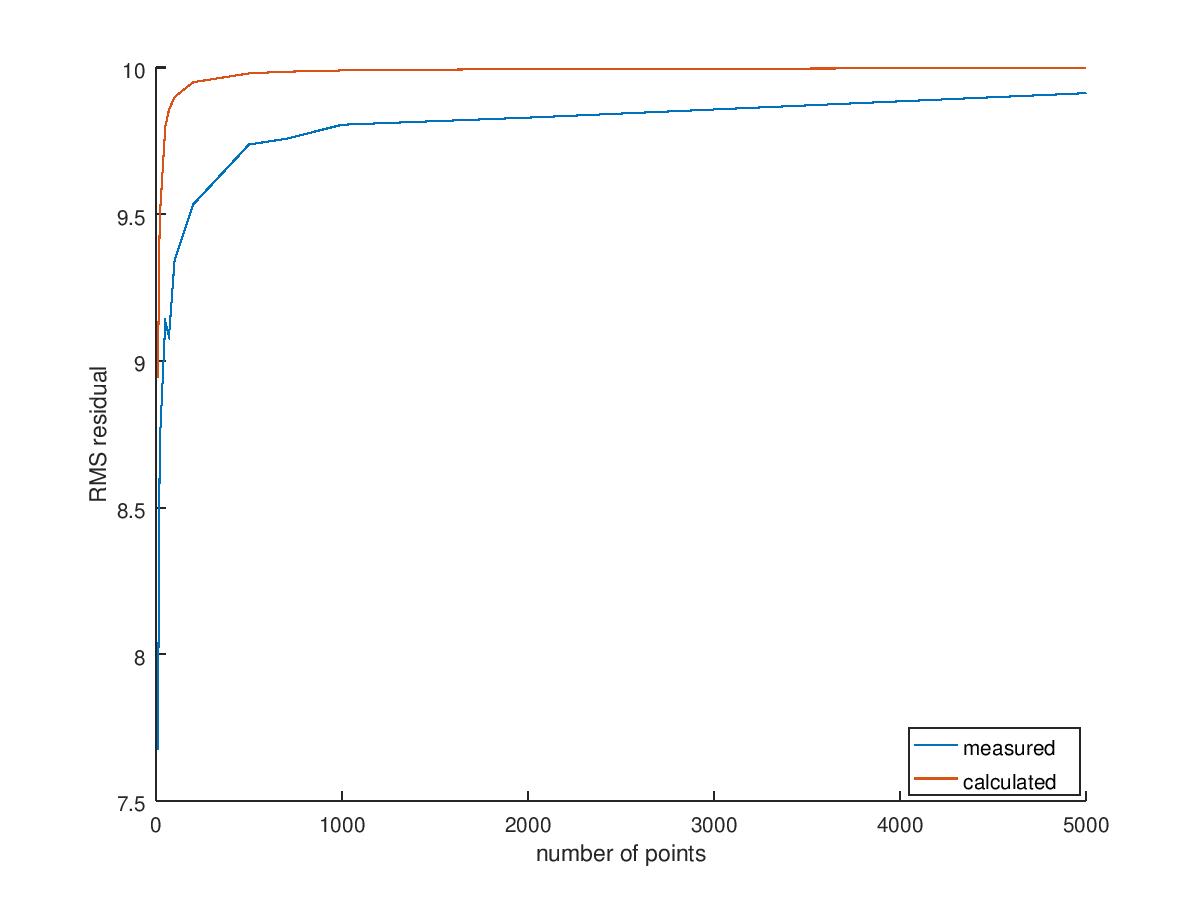

Using these values in conjunction with result 5.1(p136) we get

Just to be sure (and to generate pretty graphs), I also verified this result experimentally in Octave.

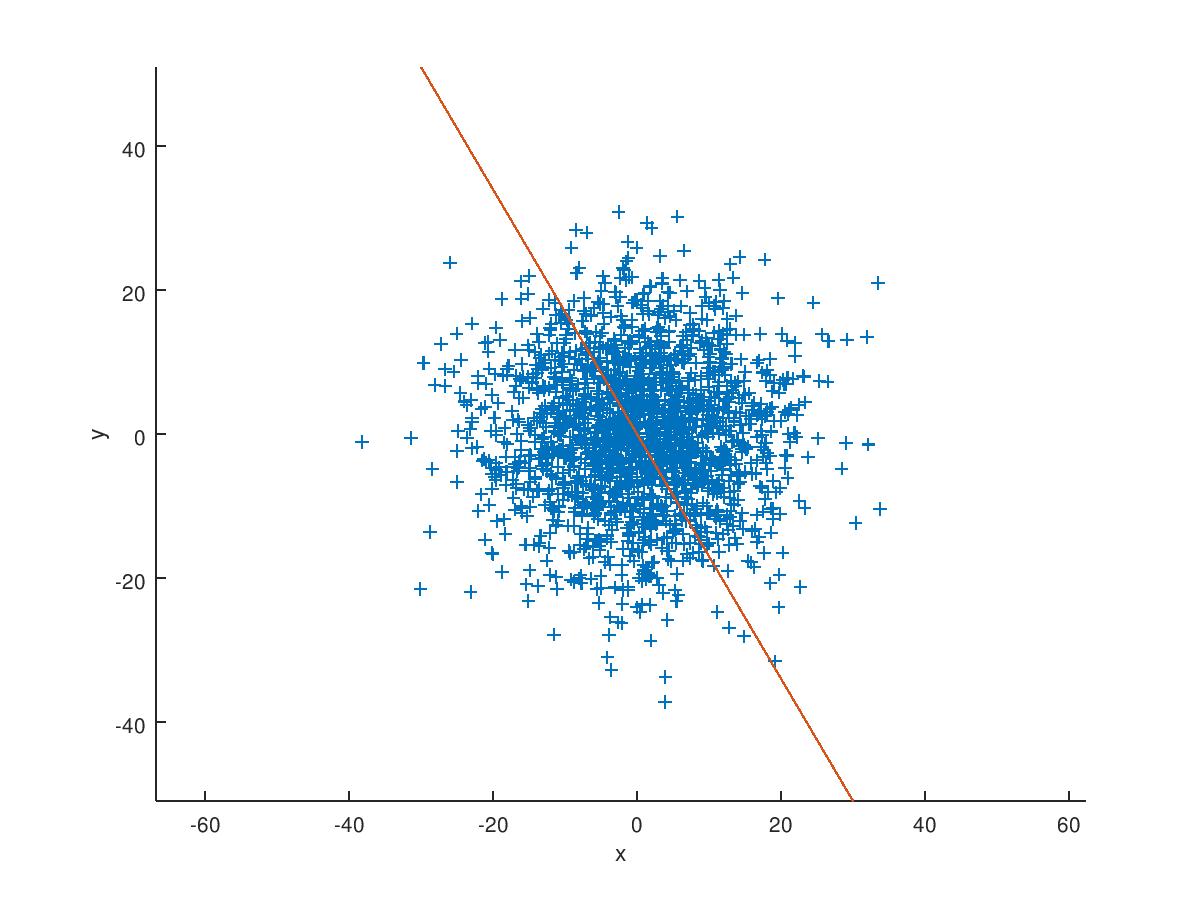

The first graph is just to show you what the best fit line using orthogonal regression would look like for a sample of 2000 points taken from a bivariate normal distribution with zero mean and a standard deviation of 10 in each coordinate.

The second graph shows that the estimated value closely tracks the closed form we just calculated, and both of them tend to $\sigma$ as the number of points tends to infinity.

If you’re interested in the Octave/Matlab code for this, I’ve uploaded it over [here](https://gist.github.com/ashimaathri/887b66c85637fc27604ae53cd3dc21d0).
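For readers without Octave, a similar experiment can be sketched in Python with NumPy. This is not a port of the gist above. It assumes noise-free points spread along a hypothetical line $y = 0.5x + 1$, perturbed by isotropic Gaussian noise, and compares the simulated RMS point-to-line distance with the first-order closed form $\sigma(1 - 2/N)^{1/2}$. The function names and the choice of line are illustrative only.

```python
import numpy as np


def rms_orthogonal_residual(n_points, sigma, n_trials=2000, seed=0):
    """Monte Carlo RMS perpendicular distance of noisy collinear points
    from their orthogonal-regression (total least squares) line."""
    rng = np.random.default_rng(seed)
    # Hypothetical ground truth: points spread along y = 0.5 x + 1.
    t = np.linspace(-100.0, 100.0, n_points)
    truth = np.stack([t, 0.5 * t + 1.0], axis=1)

    mean_sq = 0.0
    for _ in range(n_trials):
        pts = truth + rng.normal(0.0, sigma, size=truth.shape)
        centered = pts - pts.mean(axis=0)
        # The smallest eigenvalue of the 2x2 scatter matrix equals the sum of
        # squared perpendicular distances to the best-fit line.
        smallest = np.linalg.eigvalsh(centered.T @ centered)[0]
        mean_sq += smallest / n_points
    return np.sqrt(mean_sq / n_trials)


def closed_form(n_points, sigma):
    """First-order prediction sigma * sqrt(1 - 2/N) for the same quantity."""
    return sigma * np.sqrt(1.0 - 2.0 / n_points)


if __name__ == "__main__":
    for n in (5, 10, 50, 200):
        print(n, rms_orthogonal_residual(n, 1.0), closed_form(n, 1.0))
```

The closed form is a first-order result, so the agreement is best when the points are spread over a range much larger than $\sigma$, as they are in this setup.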

II. In section 18.5.2 (p450) a method is given for computing a projective reconstruction from a set of $n + 4$ point correspondences across $m$ views, where 4 of the point correspondences are presumed to be known to be from a plane. Suppose the 4 planar correspondences are known exactly, and the other $n$ image points are measured with 1 pixel error (each coordinate in each image). What is the expected residual error of $\|\mathbf{x}^i_j - \hat{P}^i \hat{\mathbf{X}}_j\|$?

As this is a problem from a much later section, I do not understand it very well yet and will come back to it later (if I remember to).

References

1. Markus Thill. The Relationship between the Mahalanobis Distance and the Chi-Squared Distribution. https://markusthill.github.io/mahalanbis-chi-squared/